| |

ID |

Date |

Author |

Subject |

Project |

|

|

4

|

Fri Mar 31 11:36:54 2017 |

Brian Clark, Hannah Hasan, Jude Rajasekera, and Carl Pfendner | Installing Software Pre-Requisites for Simulation and Analysis | Software | Instructions on installing simulation software prerequisites (ROOT, Boost, etc) on Linux computers. |

| Attachment 1: installation.pdf

|

| Attachment 2: Installation-Instructions.tar.gz

|

|

|

6

|

Tue Apr 25 10:22:50 2017 |

Jude Rajasekera | ShelfMC Cluster Runs | Software | Doing large runs of ShelfMC can be time intensive. However, if you have access to a computing cluster like Ruby or KingBee, where you are given a node with multiple processors, ShelfMC runs can be optimized by utilizing all available processors on a node. The multithread_shelfmc.sh script automates these runs for you. The script and instructions are attached below. |

| Attachment 1: multithread_shelfmc.sh

|

#!/bin/bash

#Jude Rajasekera 3/20/2017

shelfmcDir=/users/PCON0003/cond0091/ShelfMC #put your shelfmc directory address here

runName='TestRun' #name of run

NNU=500000 #total NNU per run

seed=42 #initial seed for every run, each processor will receive a different seed (42,43,44,45...)

NNU="$(($NNU / 20))" #calculating NNU per processor, change 20 to match ppn below (processors per node on your cluster)

ppn=20 #processors per node

########################### make changes for input.txt file here #####################################################

input1="#inputs for ARIANNA simulation, do not change order unless you change ReadInput()"

input2="$NNU #NNU, setting to 1 for unique neutrino"

input3="$seed #seed Seed for Rand3"

input4="18.0 #EXPONENT, !should be exclusive with SPECTRUM"

input5="1000 #ATGap, m, distance between stations"

input6="4 #ST_TYPE, !restrict to 4 now!"

input7="4 #N_Ant_perST, not to be confused with ST_TYPE above"

input8="2 #N_Ant_Trigger, this is the minimum number of AT to trigger"

input9="30 #Z for ST_TYPE=2"

input10="575 #ICETHICK, thickness of ice including firn, 575m at Moore's Bay"

input11="1 #FIRN, KD: ensure DEPTH_DEPENDENT is off if FIRN is 0"

input12="1.30 #NFIRN 1.30"

input13="122 #FIRNDEPTH in meters"

input14="1 #NROWS 12 initially, set to 3 for HEXAGONAL"

input15="1 #NCOLS 12 initially, set to 5 for HEXAGONAL"

input16="0 #SCATTER"

input17="1 #SCATTER_WIDTH,how many times wider after scattering"

input18="0 #SPECTRUM, use spectrum, ! was 1 initially!"

input19="0 #DIPOLE, add a dipole to the station, useful for st_type=0 and 2"

input20="0 #CONST_ATTENLENGTH, use constant attenuation length if ==1"

input21="1000 #ATTEN_UP, this is the conjuction of the plot attenlength_up and attlength_down when setting REFLECT_RATE=0.5(3dB)"

input22="250 #ATTEN_DOWN, this is the average attenlength_down before Minna Bluff measurement(not used anymore except for CONST_ATTENLENGTH)"

input23="4 #NSIGMA, threshold of trigger"

input24="1 #ATTEN_FACTOR, change of the attenuation length"

input25="1 #REFLECT_RATE,power reflection rate at the ice bottom"

input26="0 #GZK, 1 means using GZK flux, 0 means E-2 flux"

input27="0 #FANFLUX, use fenfang's flux which only covers from 10^17 eV to 10^20 eV"

input28="0 #WIDESPECTRUM, use 10^16 eV to 10^21.5 eV as the energy spectrum, otherwise use 17-20"

input29="1 #SHADOWING"

input30="1 #DEPTH_DEPENDENT_N;0 means uniform firn, 1 means n_firn is a function of depth"

input31="0 #HEXAGONAL"

input32="1 #SIGNAL_FLUCT 1=add noise fluctuation to signal or 0=do not"

input33="4.0 #GAINV gain dependency"

input34="1 #TAUREGENERATION if 1=tau regeneration effect, if 0=original"

input35="3.0 #ST4_R radius in meters between center of station and antenna"

input36="350 #TNOISE noise temperature in Kelvin"

input37="80 #FREQ_LOW low frequency of LPDA Response MHz #was 100"

input38="1000 #FREQ_HIGH high frequency of LPDA Response MHz"

input39="/users/PCON0003/cond0091/ShelfMC/temp/LP_gain_manual.txt #GAINFILENAME"

###########################################################################################

cd $shelfmcDir #cd to dir containing shelfmc

mkdir $runName #make a folder for run

cd $runName #cd into run folder

initSeed=$seed

for (( i=1; i<=$ppn;i++)) #make 20 setup files for 20 processors

do

mkdir Setup$i #make setup folder i

cd Setup$i #go into setup folder i

seed="$(($initSeed+$i-1))" #calculate seed for this iteration

input3="$seed #seed Seed for Rand3" #save new input line

for j in {1..39} #print all input.txt lines (input1..input39 are defined above)

do

lineName=input$j

echo "${!lineName}" >> input.txt #print line to input.txt file

done

cd ..

done

pwd=`pwd`

#create job file

echo '#!/bin/bash' >> run_shelfmc_multithread.sh

echo '#PBS -l nodes=1:ppn='$ppn >> run_shelfmc_multithread.sh #change depending on processors per node

echo '#PBS -l walltime=00:05:00' >> run_shelfmc_multithread.sh #change walltime depending on run size, will be 20x shorter than single processor run time

echo '#PBS -N shelfmc_'$runName'_job' >> run_shelfmc_multithread.sh

echo '#PBS -j oe' >> run_shelfmc_multithread.sh

echo '#PBS -A PCON0003' >> run_shelfmc_multithread.sh #change to specify group

echo 'cd ' $shelfmcDir >> run_shelfmc_multithread.sh

echo 'runName='$runName >> run_shelfmc_multithread.sh

for (( k=1; k<=$ppn;k++))

do

echo './shelfmc_stripped.exe $runName/Setup'$k' _'$k'$runName &' >> run_shelfmc_multithread.sh #execute commands for 20 setup files

done

echo 'wait' >> run_shelfmc_multithread.sh #wait until all runs are finished

echo 'cd $runName' >> run_shelfmc_multithread.sh #go into run folder

echo 'for (( i=1; i<='$ppn';i++)) #20 iterations' >> run_shelfmc_multithread.sh

echo 'do' >> run_shelfmc_multithread.sh

echo ' cd Setup$i #cd into setup dir' >> run_shelfmc_multithread.sh

echo ' mv *.root ..' >> run_shelfmc_multithread.sh #move root files to runDir

echo ' cd ..' >> run_shelfmc_multithread.sh

echo 'done' >> run_shelfmc_multithread.sh

echo 'hadd Result_'$runName'.root *.root' >> run_shelfmc_multithread.sh #add all root files

echo 'rm *ShelfMCTrees*' >> run_shelfmc_multithread.sh #delete all partial root files

chmod u+x run_shelfmc_multithread.sh

echo "Run files created"

echo "cd into run folder and do $ qsub run_shelfmc_multithread.sh"

|

| Attachment 2: multithread_shelfmc_walkthrough.txt

|

This document explains how to download, configure, and run multithread_shelfmc.sh in order to do large runs on computing clusters.

####DOWNLOAD####

1.Download multithread_shelfmc.sh

2.Move multithread_shelfmc.sh into ShelfMC directory

3.Do $chmod u+x multithread_shelfmc.sh

####CONFIGURE###

1.Open multithread_shelfmc.sh

2.On line 3, modify shelfmcDir to your ShelfMC dir

3.On line 6, add your run name

4.On line 7, add the total NNU

5.On line 8, add an initial seed

6.On line 10, specify number of processors per node for your cluster

7.On lines 12-49, edit the input.txt parameters

8.On line 50, add the location of your LP_gain_manual.txt

9.On line 80, specify a wall time for each run, remember this will be about 20x shorter than ShelfMC on a single processor

10.On line 83, Specify the group name for your cluster if needed

11.Save file

####RUN####

1.Do $./multithread_shelfmc.sh

2.There should now be a new directory in the ShelfMC dir with 20 setup files and a run_shelfmc_multithread.sh script

3.Do $qsub run_shelfmc_multithread.sh

###RESULT####

1.After the run has completed, there will be a result .root file in the run directory
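
The bookkeeping that multithread_shelfmc.sh does before writing the input.txt files can be sketched as below. This is a minimal illustration and not part of the attached script: the function name split_run is hypothetical, and it only prints the per-processor NNU and seed that each Setup directory would receive.

```shell
#!/bin/bash
# Hypothetical helper (not in multithread_shelfmc.sh): print the NNU and seed
# that each SetupN directory receives for a given total NNU, seed, and ppn.
split_run() {
    local total_nnu=$1 init_seed=$2 ppn=$3
    local nnu_per_proc=$(( total_nnu / ppn ))   # integer division, remainder is dropped
    local i
    for (( i = 1; i <= ppn; i++ )); do
        echo "Setup$i NNU=$nnu_per_proc seed=$(( init_seed + i - 1 ))"
    done
}

# Values from the attached script: 500000 neutrinos, seed 42, 20 processors.
split_run 500000 42 20
```

With these values every processor simulates 25000 neutrinos and the seeds run from 42 to 61. If the total NNU is not a multiple of ppn, the remainder is silently dropped, so the combined run is slightly smaller than requested.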

|

|

|

7

|

Tue Apr 25 10:35:43 2017 |

Jude Rajasekera | ShelfMC Parameter Space Scan | Software | These scripts allow you to do thousands of ShelfMC runs while varying certain parameters of your choice. As is, the attenuation length, reflection rate, ice thickness, firn depth, and station depth are varied over certain ranges; in total, the whole Parameter Space Scan does 5250 runs on a cluster like Ruby or KingBee. The scripts and instructions are attached below. |

| Attachment 1: ParameterSpaceScan_instructions.txt

|

This document explains how to download, configure, and run a parameter space search for ShelfMC on a computing cluster.

These scripts explore the ShelfMC parameter space by varying ATTEN_UP, REFLECT_RATE, ICETHICK, FIRNDEPTH, and STATION_DEPTH over certain ranges.

The ranges and increments can be found in setup.sh.

In order to vary STATION_DEPTH, some changes were made to the ShelfMC code. Follow these steps to allow STATION_DEPTH to be an input parameter.

1.cd to ShelfMC directory

2.Do $sed -i -e 's/ATDepth/STATION_DEPTH/g' *.cc

3.Open declaration.hh. Replace line 87 "const double ATDepth = 0.;" with "double STATION_DEPTH;"

4.In functions.cc go to line 1829. This is the ReadInput() method. Add the lines below to the end of this method.

GetNextNumber(inputfile, number); // new line for station Depth

STATION_DEPTH = (double) atof(number.c_str()); //new line

5.Do $make clean all
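
Because the sed command in step 2 edits every .cc file in place, it can help to see which lines will change and to keep a backup. The sketch below is one way to do that; rename_atdepth is a hypothetical helper name, and the .bak suffix is an arbitrary choice.

```shell
#!/bin/bash
# Hypothetical helper: rename ATDepth to STATION_DEPTH in the given files,
# keeping a .bak copy of each file so the change can be reviewed or undone.
rename_atdepth() {
    local f
    for f in "$@"; do
        grep -n 'ATDepth' "$f" || true                # show the lines that will change
        sed -i.bak -e 's/ATDepth/STATION_DEPTH/g' "$f"
    done
}

# Usage from the ShelfMC directory (assumed layout):
#   rename_atdepth *.cc
```

Once the code compiles and runs as expected, the .bak files can be deleted.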

#######Script Descriptions########

setup.sh -> This script sets up the necessary directories and setup files for all the runs

scheduler.sh -> This script submits and monitors all jobs.

#######DOWNLOAD########

1.Download setup.sh and scheduler.sh

2.Move both files into your ShelfMC directory

3.Do $chmod u+x setup.sh and $chmod u+x scheduler.sh

######CONFIGURE#######

1.Open setup.sh

2.On line 4, modify the job name

3.On line 6, modify group name

4.On line 10, specify your ShelfMC directory

5.On line 13, modify your run name

6.On line 14, specify the NNU per run

7.On line 15, specify the starting seed

8.On line 17, specify the number of processors per node on your cluster

9.On lines 19-56, edit the input.txt parameters that you want to keep constant for every run

10.On line 57, specify the location of the LP_gain_manual.txt

11.On line 126, change walltime depending on total NNU. Remember this wall time will be 20x shorter than a single processor run.

12.On line 127, change job prefix

13.On line 129, change the group name if needed

14.Save file

15.Open scheduler.sh

16.On line 4, specify your ShelfMC directory

17.On line 5, modify run name. Make sure it is the same runName as you have in setup.sh

18.On lines 35 and 39, replace the username in the showq/grep commands (rajasekera.3 in the attached script) with your username for the cluster

19.On line 42, you can pick how many nodes you want to use at any given time. It is set to 6 initially.

20.Save file

#######RUN#######

1.Do $qsub setup.sh

2.Wait for setup.sh to finish. This script is creating the setup files for all runs. This may take about an hour.

3.When setup.sh is done, there should be a new directory in your home directory. Move this directory to your ShelfMC directory.

4.Do $screen to start a new screen session that the scheduler can run in. This is in case you lose your connection to the cluster mid-run.

5.Do $./scheduler.sh to start the script. This script automatically submits jobs and lets you see the status of the runs. This will run for several hours.

6.The scheduler makes a text file of all jobs called jobList.txt in the ShelfMC dir. Make sure to delete jobList.txt before starting a whole new run.

######RESULT#######

1.When completed, the run directories will hold a large amount of data, about 460GB.

2.The run directory is organized as a tree; results for a particular run can be found by cd'ing deeper into the tree.

3.In each run directory, there will be a resulting root file, all the setup files, and a log file for the run.
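
As a quick check on the size of the scan, the nested ranges used in setup.sh and scheduler.sh can be counted with a few lines of bash. The function name count_runs is hypothetical; the ranges are copied from the attached scripts.

```shell
#!/bin/bash
# Count the parameter combinations produced by the ranges used in setup.sh.
count_runs() {
    local n=0 L R T FT SD
    for L in {500..1000..100}; do       # ATTEN_UP
    for R in {0..100..25}; do           # REFLECT_RATE (in percent)
    for T in {500..2900..400}; do       # ICETHICK
    for FT in {60..140..20}; do         # FIRNDEPTH
    for SD in {0..200..50}; do          # STATION_DEPTH
        n=$(( n + 1 ))
    done; done; done; done; done
    echo "$n"
}

count_runs   # prints 5250
```

The count is 6 x 5 x 7 x 5 x 5 = 5250, which matches the number of runs quoted for the full scan. Changing any range in setup.sh changes this total, and the same range must be changed in scheduler.sh so the job list matches the directory tree.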

|

| Attachment 2: setup.sh

|

#!/bin/bash

#PBS -l walltime=04:00:00

#PBS -l nodes=1:ppn=1,mem=4000mb

#PBS -N jude_SetupJob

#PBS -j oe

#PBS -A PCON0003

#Jude Rajasekera 3/20/17

#directories

WorkDir=$TMPDIR

tmpShelfmc=$HOME/shelfmc/ShelfMC #set your ShelfMC directory here

#controlled variables for run

runName='ParamSpaceScanDir' #name of run

NNU=500000 #NNU per run

seed=42 #starting seed for every run, each processor will receive a different seed (42,43,44,45...)

NNU="$(($NNU / 20))" #calculating NNU per processor, change 20 to match ppn below (processors per node on your cluster)

ppn=5 #number of processors per node on cluster

########################### input.txt file ####################################################

input1="#inputs for ARIANNA simulation, do not change order unless you change ReadInput()"

input2="$NNU #NNU, setting to 1 for unique neutrino"

input3="$seed #seed Seed for Rand3"

input4="18.0 #EXPONENT, !should be exclusive with SPECTRUM"

input5="1000 #ATGap, m, distance between stations"

input6="4 #ST_TYPE, !restrict to 4 now!"

input7="4 #N_Ant_perST, not to be confused with ST_TYPE above"

input8="2 #N_Ant_Trigger, this is the minimum number of AT to trigger"

input9="30 #Z for ST_TYPE=2"

input10="$T #ICETHICK, thickness of ice including firn, 575m at Moore's Bay"

input11="1 #FIRN, KD: ensure DEPTH_DEPENDENT is off if FIRN is 0"

input12="1.30 #NFIRN 1.30"

input13="$FT #FIRNDEPTH in meters"

input14="1 #NROWS 12 initially, set to 3 for HEXAGONAL"

input15="1 #NCOLS 12 initially, set to 5 for HEXAGONAL"

input16="0 #SCATTER"

input17="1 #SCATTER_WIDTH,how many times wider after scattering"

input18="0 #SPECTRUM, use spectrum, ! was 1 initially!"

input19="0 #DIPOLE, add a dipole to the station, useful for st_type=0 and 2"

input20="0 #CONST_ATTENLENGTH, use constant attenuation length if ==1"

input21="$L #ATTEN_UP, this is the conjuction of the plot attenlength_up and attlength_down when setting REFLECT_RATE=0.5(3dB)"

input22="250 #ATTEN_DOWN, this is the average attenlength_down before Minna Bluff measurement(not used anymore except for CONST_ATTENLENGTH)"

input23="4 #NSIGMA, threshold of trigger"

input24="1 #ATTEN_FACTOR, change of the attenuation length"

input25="$Rval #REFLECT_RATE,power reflection rate at the ice bottom"

input26="0 #GZK, 1 means using GZK flux, 0 means E-2 flux"

input27="0 #FANFLUX, use fenfang's flux which only covers from 10^17 eV to 10^20 eV"

input28="0 #WIDESPECTRUM, use 10^16 eV to 10^21.5 eV as the energy spectrum, otherwise use 17-20"

input29="1 #SHADOWING"

input30="1 #DEPTH_DEPENDENT_N;0 means uniform firn, 1 means n_firn is a function of depth"

input31="0 #HEXAGONAL"

input32="1 #SIGNAL_FLUCT 1=add noise fluctuation to signal or 0=do not"

input33="4.0 #GAINV gain dependency"

input34="1 #TAUREGENERATION if 1=tau regeneration effect, if 0=original"

input35="3.0 #ST4_R radius in meters between center of station and antenna"

input36="350 #TNOISE noise temperature in Kelvin"

input37="80 #FREQ_LOW low frequency of LPDA Response MHz #was 100"

input38="1000 #FREQ_HIGH high frequency of LPDA Response MHz"

input39="/home/rajasekera.3/shelfmc/ShelfMC/temp/LP_gain_manual.txt #GAINFILENAME"

input40="$SD #STATION_DEPTH"

#######################################################################################################

cd $TMPDIR

mkdir $runName

cd $runName

initSeed=$seed

counter=0

for L in {500..1000..100} #attenuation length 500-1000

do

mkdir Atten_Up$L

cd Atten_Up$L

for R in {0..100..25} #Reflection Rate 0-1

do

mkdir ReflectionRate$R

cd ReflectionRate$R

if [ "$R" = "100" ]; then #fixing reflection rate value

Rval="1.0"

else

Rval="0.$R"

fi

for T in {500..2900..400} #Thickness of Ice 500-2900

do

mkdir IceThick$T

cd IceThick$T

for FT in {60..140..20} #Firn Thickness 60-140

do

mkdir FirnThick$FT

cd FirnThick$FT

for SD in {0..200..50} #Station Depth

do

mkdir StationDepth$SD

cd StationDepth$SD

#####Do file operations###########################################

counter=$((counter+1))

echo "Counter = $counter ; L = $L ; R = $Rval ; T = $T ; FT = $FT ; SD = $SD " #print variables

#define changing lines

input21="$L #ATTEN_UP, this is the conjuction of the plot attenlength_up and attlength_down when setting REFLECT_RATE=0.5(3dB)"

input25="$Rval #REFLECT_RATE,power reflection rate at the ice bottom"

input10="$T #ICETHICK, thickness of ice including firn, 575m at Moore's Bay"

input13="$FT #FIRNDEPTH in meters"

input40="$SD #STATION_DEPTH"

for (( i=1; i<=$ppn;i++)) #make one setup folder per processor

do

mkdir Setup$i #make setup folder

cd Setup$i #go into setup folder

seed="$(($initSeed + $i -1))" #calculate seed for this iteration

input3="$seed #seed Seed for Rand3"

for j in {1..40} #print all input.txt lines

do

lineName=input$j

echo "${!lineName}" >> input.txt

done

cd ..

done

pwd=`pwd`

#create job file

echo '#!/bin/bash' >> run_shelfmc_multithread.sh

echo '#PBS -l nodes=1:ppn='$ppn >> run_shelfmc_multithread.sh

echo '#PBS -l walltime=00:05:00' >> run_shelfmc_multithread.sh #change walltime as necessary

echo '#PBS -N jude_'$runName'_job' >> run_shelfmc_multithread.sh #change job name as necessary

echo '#PBS -j oe' >> run_shelfmc_multithread.sh

echo '#PBS -A PCON0003' >> run_shelfmc_multithread.sh #change group if necessary

echo 'cd ' $tmpShelfmc >> run_shelfmc_multithread.sh

echo 'runName='$runName >> run_shelfmc_multithread.sh

for (( i=1; i<=$ppn;i++))

do

echo './shelfmc_stripped.exe $runName/'Atten_Up$L'/'ReflectionRate$R'/'IceThick$T'/'FirnThick$FT'/'StationDepth$SD'/Setup'$i' _'$i'$runName &' >> run_shelfmc_multithread.sh

done

# echo './shelfmc_stripped.exe $runName/'Atten_Up$L'/'ReflectionRate$R'/'IceThick$T'/'FirnThick$FT'/'StationDepth$SD'/Setup1 _01$runName &' >> run_shelfmc_multithread.sh

echo 'wait' >> run_shelfmc_multithread.sh

echo 'cd $runName/'Atten_Up$L'/'ReflectionRate$R'/'IceThick$T'/'FirnThick$FT'/'StationDepth$SD >> run_shelfmc_multithread.sh

echo 'for (( i=1; i<='$ppn';i++)) #20 iterations' >> run_shelfmc_multithread.sh

echo 'do' >> run_shelfmc_multithread.sh

echo ' cd Setup$i #cd into setup dir' >> run_shelfmc_multithread.sh

echo ' mv *.root ..' >> run_shelfmc_multithread.sh

echo ' cd ..' >> run_shelfmc_multithread.sh

echo 'done' >> run_shelfmc_multithread.sh

echo 'hadd Result_'$runName'.root *.root' >> run_shelfmc_multithread.sh

echo 'rm *ShelfMCTrees*' >> run_shelfmc_multithread.sh

chmod u+x run_shelfmc_multithread.sh # make executable

##################################################################

cd ..

done

cd ..

done

cd ..

done

cd ..

done

cd ..

done

cd

mv $WorkDir/$runName $HOME

|

| Attachment 3: scheduler.sh

|

#!/bin/bash

#Jude Rajasekera 3/20/17

tmpShelfmc=$HOME/shelfmc/ShelfMC #location of Shelfmc

runName=ParamSpaceScanDir #name of run

cd $tmpShelfmc #move to ShelfMC directory

if [ ! -f ./jobList.txt ]; then #see if there is an existing job file

echo "Creating new job List"

for L in {500..1000..100} #attenuation length 500-1000

do

for R in {0..100..25} #Reflection Rate 0-1

do

for T in {500..2900..400} #Thickness of Ice 500-2900

do

for FT in {60..140..20} #Firn Thickness 60-140

do

for SD in {0..200..50} #Station Depth

do

echo "cd $runName/Atten_Up$L/ReflectionRate$R/IceThick$T/FirnThick$FT/StationDepth$SD" >> jobList.txt

done

done

done

done

done

else

echo "Picking up from last job"

fi

numbLeft=$(wc -l < ./jobList.txt)

while [ $numbLeft -gt 0 ];

do

jobs=$(showq | grep "rajasekera.3") #change username here

echo '__________Current Running Jobs__________'

echo "$jobs"

echo ''

runningJobs=$(showq | grep "rajasekera.3" | wc -l) #change username here

echo Number of Running Jobs = $runningJobs

echo Number of jobs left = $numbLeft

if [ $runningJobs -le 6 ];then

line=$(head -n 1 jobList.txt)

$line

echo Submit Job && pwd

qsub run_shelfmc_multithread.sh

cd $tmpShelfmc

sed -i 1d jobList.txt

else

echo "Full Capacity"

fi

sleep 1

numbLeft=$(wc -l < ./jobList.txt)

done
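
The scheduler treats jobList.txt as a file-backed queue: it reads the first line, acts on it, and then deletes that line, so an interrupted scheduler can pick up where it left off. The pop step can be tried on its own, without a batch system, with a sketch like the one below. The name next_job is hypothetical and the function is not part of scheduler.sh.

```shell
#!/bin/bash
# Hypothetical helper: print the first line of a job list and remove it from
# the file, mirroring the head/sed pair in scheduler.sh.
next_job() {
    local list=$1
    [ -s "$list" ] || return 1          # nothing left to submit
    head -n 1 "$list"
    sed -i.bak 1d "$list" && rm -f "$list.bak"
}

# Usage: walk a job list without submitting anything.
#   while job=$(next_job jobList.txt); do echo "would run: $job"; done
```

Running the usage loop on a copy of jobList.txt shows the order in which directories would be visited, without calling qsub.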

|

|

|

Draft

|

Thu Apr 27 18:28:22 2017 |

Sam Stafford (Also Slightly Jacob) | Installing AnitaTools on OSC | Software | Jacob here, just want to add how I got AnitaTools to see FFTW:

1) echo $FFTW3_HOME to find where the lib and include dirs are.

2) Next add the following line to the start of cmake/modules/FindFFTW.cmake

'set ( FFTW_ROOT full/path/you/got/from/step/1)'
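
The two steps above can be sketched as a small shell function. This is only an illustration: prepend_fftw_root is a hypothetical name, and it assumes the module file already exists and that FFTW3_HOME is set in your environment as described in step 1.

```shell
#!/bin/bash
# Hypothetical helper: prepend a "set( FFTW_ROOT <dir> )" line to a CMake
# find-module, e.g. cmake/modules/FindFFTW.cmake in anitaBuildTool.
prepend_fftw_root() {
    local fftw_dir=$1 module_file=$2
    local tmp
    tmp=$(mktemp) || return 1
    # Write the new first line followed by the original contents, then copy
    # the result back so the file keeps its original permissions.
    { printf 'set( FFTW_ROOT %s )\n' "$fftw_dir"; cat "$module_file"; } > "$tmp" &&
        cat "$tmp" > "$module_file"
    rm -f "$tmp"
}

# Usage (run from the anitaBuildTool directory):
#   prepend_fftw_root "$FFTW3_HOME" cmake/modules/FindFFTW.cmake
```

Running it twice adds the line twice, so check the top of the file before re-running.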

Brief, experience-based instructions on installing the AnitaTools package on the Oakley OSC cluster. |

| Attachment 1: OSC_build.txt

|

Installing AnitaTools on OSC

Sam Stafford

04/27/2017

This document summarizes the issues I encountered installing AnitaTools on the OSC Oakley cluster.

I have indicated work-arounds I made for unexpected issues

I do not know that this is the only valid process

This process was developed by trial-and-error (mostly error) and may contain superfluous steps

A person familiar with AnitaTools and cmake may be able to streamline it

Check out OSC's web site, particularly to find out about MODULES, which facilitate access to pre-installed software

export the following environment variables in your .bash_profile (not .bashrc):

ROOTSYS where you want ROOT to live

install it somewhere in your user directory; at this time, ROOT is not pre-installed on Oakley as far as I can tell

ANITA_UTIL_INSTALL_DIR where you want anitaTools to live

FFTWDIR where fftw is

look on OSC's website to find out where it is; you shouldn't have to install it locally

PATH should contain $FFTWDIR/bin and $ROOTSYS/bin

LD_LIBRARY_PATH should contain $FFTWDIR/lib $ROOTSYS/lib $ANITA_UTIL_INSTALL_DIR/lib

LD_INCLUDE_PATH should contain $FFTWDIR/include $ROOTSYS/include $ANITA_UTIL_INSTALL_DIR/include

also put in your .bash_profile: (I put these after the exports)

module load gnu/4.8.5 // loads g++ compiler

(this should automatically load module fftw/3.3.4 also)

install ROOT - follow ROOT's instructions to build from source. It's a typical (configure / make / make install) sequence

you probably need ./configure --enable-Minuit2

get AnitaTools from github/anitaNeutrino "anitaBuildTool" (see Anita ELOG 672, by Cosmin Deaconu)

Change entry in which_event_reader to ANITA3, if you want to analyze ANITA-3 data

(at least for now; I think they are developing smarts to make the SW adapt automatically to the anita data "version")

Do ./buildAnita.sh //downloads the software and attempts a full build/install

it may fail with a can't-find-fftw error during configure:

system fails to populate environment variable FFTW_ROOT, not sure why

add the following line at beginning of anitaBuildTool/cmake/modules/FindFFTW.cmake:

set( FFTW_ROOT /usr/local/fftw3/3.3.4-gnu)

(this apparently tricks cmake into finding fftw)

NOTE: ./buildAnita.sh always downloads the software from github. IT WILL WIPE OUT ANY CHANGES YOU MADE TO AnitaTools!

Do "make"

May fail with /usr/lib64/libstdc++.so.6: version `GLIBCXX_3.4.14' not found and/or a few other similar messages

or may say c11 is not supported

need to change compiler setting for cmake:

make the following change in anitaBuildTool/build/CMakeCache.txt (points cmake to the g++ compiler instead of default intel/c++)

#CMAKE_CXX_COMPILER:FILEPATH=/usr/bin/c++ (comment this out)

CMAKE_CXX_COMPILER:FILEPATH=/usr/local/gcc/4.8.5/bin/g++ (add this)

(you don't necessarily have to use this version, gcc/4.8.5, but it worked for me)

Then retry by doing "make"

Once make is completed, do "make install"

A couple of notes:

Once AnitaTools is built, if you change source, just do make, then make install (from anitaBuildTool) (don't ./buildAnita.sh; see above)

(actually make install will do the make step if source changes are detected)

To start AnitaTools over from cmake, delete the anitaBuildTool/build directory and run make (not cmake: make will drive cmake for you)

(don't do cmake directly unless you know what you're doing; it'll mess things up)
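
The environment setup described at the top of these notes might look like the following in .bash_profile. This is a sketch under stated assumptions: the ROOT and AnitaTools directories are placeholders chosen for illustration, and the FFTW path is the one quoted in these notes for Oakley, which may differ on other clusters.

```shell
# Sketch of the .bash_profile additions described above.
# The ROOT and AnitaTools directories are placeholders; substitute your own.
export ROOTSYS="$HOME/root"                      # assumed ROOT install location
export ANITA_UTIL_INSTALL_DIR="$HOME/anitaUtil"  # assumed AnitaTools install location
export FFTWDIR="/usr/local/fftw3/3.3.4-gnu"      # FFTW path quoted in these notes

export PATH="$FFTWDIR/bin:$ROOTSYS/bin:$PATH"
export LD_LIBRARY_PATH="$FFTWDIR/lib:$ROOTSYS/lib:$ANITA_UTIL_INSTALL_DIR/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"
export LD_INCLUDE_PATH="$FFTWDIR/include:$ROOTSYS/include:$ANITA_UTIL_INSTALL_DIR/include${LD_INCLUDE_PATH:+:$LD_INCLUDE_PATH}"

# Load the GNU compiler module only where the module command exists (e.g. on OSC).
if command -v module >/dev/null 2>&1; then
    module load gnu/4.8.5
fi
```

The `${VAR:+:$VAR}` form appends the previous value only if one was set, which avoids a stray trailing colon in the search paths.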

|

|

|

9

|

Thu May 11 13:43:46 2017 |

Sam Stafford | Notes on installing icemc on OSC | Software | |

| Attachment 1: icemc_setup_osc.txt

|

A few notes about installing icemc on OSC

Dependencies

ROOT - download from CERN and install according to instructions

FFTW - do "module load gnu/4.8.5" (or put it in your .bash_profile)

The environment variable FFTWDIR must contain the directory where FFTW resides

in my case this was /usr/local/fftw3/3.3.4-gnu

set this up in your .bash_profile (not .bashrc)

I copied my working instance of icemc from my laptop to a folder in my osc space

Copy the whole icemc directory (maybe it's icemc/trunk, depending on how you installed), EXCEPT for the "output" subdir because it's big and unnecessary

in your icemc directory on OSC, do "mkdir output"

In icemc/Makefile

find a statement like this:

LIBS += -lMathMore $(FFTLIBS) -lAnitaEvent

and modify it to include the directory where the FFTW library is:

LIBS += -L$(FFTWDIR)/lib -lMathMore $(FFTLIBS) -lAnitaEvent

note: FFTLIBS contains the list of libraries (e.g., -lfftw3), NOT the library search paths

Compile by doing "make"

Remember you should set up a batch job on OSC using PBS.

|

|

|

10

|

Thu May 11 14:38:10 2017 |

Sam Stafford | Sample OSC batch job setup | Software | Batch jobs on OSC are initiated through the Portable Batch System (PBS). This is the recommended way to run stuff on OSC clusters.

Attached is a sample PBS script that copies files to temporary storage on the OSC cluster (also recommended) and runs an analysis program.

Info on batch processing is at https://www.osc.edu/supercomputing/batch-processing-at-osc.

This will tell you how to submit and manage batch jobs.

More resources are available at www.osc.edu.

PBS web site: www.pbsworks.com

The PBS user manual is at www.pbsworks.com/documentation/support/PBSProUserGuide10.4.pdf. |

| Attachment 1: osc_batch_jobs.txt

|

## annotated sample PBS batch job specification for OSC

## Sam Stafford 05/11/2017

#PBS -N j_ai06_${RUN_NUMBER}

##PBS -m abe ## request an email when the job aborts, begins, and ends

#PBS -l mem=16GB ## request 16GB memory

##PBS -l walltime=06:00:00 ## set this in qsub

#PBS -j oe ## merge stdout and stderr into a single output log file

#PBS -A PAS0174

echo "run number " $RUN_NUMBER

echo "cal pulser " $CAL_PULSER

echo "baseline file " $BASELINE_FILE

echo "temp dir is " $TMPDIR

echo "ANITA_DATA_REMOTE_DIR="$ANITA_DATA_REMOTE_DIR

set -x

## copy the files from kingbee to the temporary workspace

## (if you set up public key authentication between kingbee and OSC, you won't need a password; just google "public key authentication")

mkdir $TMPDIR/run${RUN_NUMBER} ## make a directory for this run number

scp stafford.16@kingbee.mps.ohio-state.edu:/data/anita/anita3/flightData/copiedBySam/run${RUN_NUMBER}/calEventFile${RUN_NUMBER}.root $TMPDIR/run${RUN_NUMBER}/calEventFile${RUN_NUMBER}.root

scp stafford.16@kingbee.mps.ohio-state.edu:/data/anita/anita3/flightData/copiedBySam/newerData/run${RUN_NUMBER}/gpsEvent${RUN_NUMBER}.root $TMPDIR/run${RUN_NUMBER}/gpsEvent${RUN_NUMBER}.root

scp stafford.16@kingbee.mps.ohio-state.edu:/data/anita/anita3/flightData/copiedBySam/newerData/run${RUN_NUMBER}/timedHeadFile${RUN_NUMBER}.root $TMPDIR/run${RUN_NUMBER}/timedHeadFile${RUN_NUMBER}.root

scp stafford.16@kingbee.mps.ohio-state.edu:/data/anita/anita3/flightData/copiedBySam/newerData/run${RUN_NUMBER}/decBlindHeadFileV1_${RUN_NUMBER}.root $TMPDIR/run${RUN_NUMBER}/decBlindHeadFileV1_${RUN_NUMBER}.root

## set up the environment variables to point to the temporary work space

export ANITA_DATA_REMOTE_DIR=$TMPDIR

export ANITA_DATA_LOCAL_DIR=$TMPDIR

echo "ANITA_DATA_REMOTE_DIR="$ANITA_DATA_REMOTE_DIR

## run the analysis program

cd analysisSoftware

./analyzerIterator06 ${CAL_PULSER} -S1 -Noverlap --FILTER_OPTION=4 ${BASELINE_FILE} ${RUN_NUMBER} -O

echo "batch job ending"
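
The sample script reads RUN_NUMBER, CAL_PULSER, and BASELINE_FILE from its environment and leaves the walltime to be set at submission. A minimal sketch of how such a submission could be assembled is shown below. The helper name build_qsub_cmd, the script file name, and all argument values are placeholders for illustration, not values from the original analysis.

```shell
#!/bin/bash
# Hypothetical helper: build the qsub command line for one run.
# -v passes variables into the job's environment; -l walltime sets the limit
# that the sample script leaves commented out.
build_qsub_cmd() {
    local run=$1 cal_pulser=$2 baseline=$3 walltime=$4 script=$5
    echo "qsub -l walltime=$walltime -v RUN_NUMBER=$run,CAL_PULSER=$cal_pulser,BASELINE_FILE=$baseline $script"
}

# Print the command instead of running it, so it can be inspected first.
# All five arguments here are placeholder values.
build_qsub_cmd 123 LDB baseline.root 06:00:00 osc_batch_job.pbs
```

Printing the command first makes it easy to loop over many run numbers and review the list before anything is submitted. Note that #PBS directive lines are read by the scheduler rather than the shell, so the ${RUN_NUMBER} in the -N directive may not be expanded; passing -N on the qsub command line is a possible alternative.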

|

|

|

14

|

Mon Sep 18 12:06:01 2017 |

Oindree Banerjee | How to get anitaBuildTool and icemc set up and working | Software | First try reading and following the instructions here

https://u.osu.edu/icemc/new-members-readme/

Then e-mail me at oindreeb@gmail.com with your problems

|

|

|

20

|

Tue Mar 20 09:24:37 2018 |

Brian Clark | Get Started with Making Plots for IceMC | Software | |

Second, here is the page for the software IceMC, which is the Monte Carlo software for simulating neutrinos for ANITA.

On that page are good instructions for downloading the software and how to run it. You will have the choice of running it on (1) a personal machine (if you want to use your personal mac or linux machine), (2) a queenbee laptop in the lab, or (3) a kingbee account, which I will send an email about shortly. Running IceMC will require a piece of statistics software called ROOT that can be somewhat challenging to install--it is already installed on Kingbee and OSC, so it is easier to get started there. If you want to use Kingbee, just try downloading and running. If you want to use OSC, you're first going to need to follow instructions to access a version installed on OSC. Still getting that together.

So, familiarize yourself with the command line, and then see if you can get ROOT and IceMC installed and running. Then plots. |

|

|

21

|

Fri Mar 30 12:06:11 2018 |

Brian Clark | Get icemc running on Kingbee and Unity | Software | So, icemc has some needs (like MathMore, and preferably ROOT 6) that aren't installed on Kingbee and Unity.

Here's what I did to get icemc running on Kingbee.

Throughout, $HOME=/home/clark.2668

- Tried to install a new version of ROOT (6.08.06, which is the version Jacob uses on OSC) with CMake. Failed because the Kingbee version of cmake is too old.

- Downloaded new version of CMAKE (3.11.0), failed because kingbee doesn't have C++11 support.

- Downloaded new version of gcc (7.3) and installed that in $HOME/QCtools/source/gcc-7.3. So I installed it "in place".

- Then, compiled the new version of CMAKE, also in place, so it's in $HOME/QCtools/source/cmake-3.11.0.

- Then, tried to compile ROOT, but it got upset because it couldn't find CXX11; so I added "export CC=$HOME/QCtools/source/gcc-7.3/bin/gcc" and then it could find it.

- Then, tried to compile ROOT, but couldn't because ROOT needs Python 2.7 or newer, and kingbee has Python 2.6.

- So, downloaded the latest bleeding edge version of Python 3 (Python 3.6.5), and installed that with optimization flags. It's installed in $HOME/QCtools/tools/python-3.6.5-build.

- Tried to compile ROOT, and realized that I need to also compile the shared library files for python. So went back and compiled with --enable-shared as an argument to ./configure.

- Had to set the python binary, include, and library files custom in the CMakeCache.txt file.
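
The environment behind the steps above can be sketched as follows. The directory names come from the notes; the CXX export, the configure flags, the cmake binary location, and the -DPYTHON_EXECUTABLE setting are assumptions about how such a build is commonly done, not a record of the commands that were actually run. The sketch only prints the commands.

```shell
#!/bin/bash
# Sketch of the environment used for the builds listed above. Paths follow the
# notes; CXX and the exact configure/cmake flags are assumptions.
QC="$HOME/QCtools"
export CC="$QC/source/gcc-7.3/bin/gcc"
export CXX="$QC/source/gcc-7.3/bin/g++"            # assumed counterpart of CC
PY_PREFIX="$QC/tools/python-3.6.5-build"

# Print the commands rather than running them (dry run).
print_build_plan() {
    # Python: shared libraries are needed so that ROOT can link against them.
    echo "./configure --prefix=$PY_PREFIX --enable-shared --enable-optimizations"
    # ROOT: point CMake at the custom Python instead of editing CMakeCache.txt by hand.
    echo "$QC/source/cmake-3.11.0/bin/cmake -DPYTHON_EXECUTABLE=$PY_PREFIX/bin/python3 <root-source-dir>"
}

print_build_plan
```

Passing the Python location on the cmake command line is one alternative to editing CMakeCache.txt afterwards; the include and library paths may still need to be set the same way.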

|

|

|

26

|

Sun Aug 26 19:23:57 2018 |

Brian Clark | Get a quick start with AraSim on OSC Oakley | Software | These are instructions I wrote for Rishabh Khandelwal to facilitate a "fast" start on Oakley at OSC. It was to help him run AraSim in batch jobs on Oakley.

It basically has you use software dependencies that I pre-installed on my OSC account at /users/PAS0654/osu0673/PhasedArraySimulation.

It also gives a "batch_processing" folder with examples for how to successfully run AraSim batch jobs (with correct output file management) on Oakley.

Sourcing these exact dependencies will not work on Owens or Ruby, sorry. |

| Attachment 1: forRishabh.tar.gz

|

|

|

31

|

Thu Dec 13 17:33:54 2018 |

s prohira | parallel jobs on ruby | Software | On ruby, users get charged for the full node, even if you aren't using all 20 cores, so it's a pain if you want to run a bunch of serial jobs. There is, however, a thing called the 'parallel command processor' (pcp) which is provided on ruby, (https://www.osc.edu/resources/available_software/software_list/parallel_command_processor) that makes it very simple.

essentially, you make a text file filled with commands, one command per line, and then you give it to the parallel command processor and it submits each line of your text file as an individual job. the nice thing about this is that you don't have to think about it. you just give it the file and go, and it will use all cores on the full node in the most efficient way possible.

below i provide 2 examples, a very simple one to show you how it works, and a more complicated one. in both files, i make the command file inside of a loop. you don't need to do this-you can make the file in some other way if you choose to. note that you can also do this from within an interactive job. more instructions at the above link.

test.pbs is just a minimal thing, where you need to submit the same command but with some value that needs to be incremented 1000 times (e.g. 1000 different jobs).

effvol.pbs is more involved, and shows some important steps if your job produces a lot of output, where you use the $TMPDIR or the pbs workdir. (if you don't know what that is, you probably don't need to use it). each command in this file stores an output file to the $TMPDIR directory. this directory is accessed faster than the directories where you store your files, and so your jobs run faster. at the end of the script, all of the output files from all of the run jobs, are copied to my home directory, because $TMPDIR is deleted after each job. also this file shows the sourcing of a particular bash profile for submitted jobs (if you need this. some programs work differently when submitted than jobs run on the login nodes on ruby).
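the copy-back step at the end of a job like effvol.pbs can be sketched as a small helper. this is only an illustration under assumptions: collect_outputs is a hypothetical name, and the destination directory and file pattern are whatever your own job needs.

```shell
#!/bin/bash
# sketch only: collect_outputs and its arguments are hypothetical

# copy every regular file matching a pattern from the scratch directory
# to permanent storage, creating the destination if needed
# usage: collect_outputs <scratch dir> <destination dir> <file pattern>
collect_outputs() (
    scratch=$1
    dest=$2
    pattern=$3
    mkdir -p "$dest" || exit 1
    # find is used so that nothing is copied (and nothing fails) when no file matches
    find "$scratch" -maxdepth 1 -type f -name "$pattern" -exec cp {} "$dest"/ \;
)

# in a job script, after the mpiexec line and before the job ends:
#   collect_outputs "$TMPDIR" "$HOME/results" '*.root'
```

quote the pattern so the shell hands it to find unexpanded; $HOME/results above is just an example destination.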

i recommend reading the above link for more information. the pcp is very useful on ruby! |

| Attachment 1: test.pbs

|

#!/bin/bash

#PBS -A PCON0003

#PBS -l walltime=01:00:00

#PBS -l nodes=1:ppn=20

touch commandfile

for value in {1..1000}

do

line="/path/to/your_command_to_run $value (arg1) (arg2)..(argn)"

echo ${line}>> commandfile

done

module load pcp

mpiexec parallel-command-processor commandfile

|

| Attachment 2: effvol.pbs

|

#!/bin/bash

#PBS -A PCON0003

#PBS -N effvol

#PBS -l walltime=01:00:00

#PBS -l nodes=1:ppn=20

#PBS -o ./log/out

#PBS -e ./log/err

source /users/PCON0003/osu10643/.bash_batch

cd $TMPDIR

touch effvolconf

for value in {1..1000}

do

line="/users/PCON0003/osu10643/app/geant/app/nrt -m /users/PCON0003/osu10643/app/geant/app/nrt/effvol.mac -f \"$TMPDIR/effvol$value.root\""

echo ${line}>> effvolconf

done

module load pcp

mpiexec parallel-command-processor effvolconf

cp $TMPDIR/*summary.root /users/PCON0003/osu10643/doc/root/summary/

|

|

|

33

|

Mon Feb 11 21:58:26 2019 |

Brian Clark | Get a quick start with icemc on OSC | Software | Follow the instructions in the attached "getting_running_with_anita_stuff.pdf" file to download icemc, compile it, generate results, and plot those results. |

| Attachment 1: sample_bash_profile.sh

|

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/.local/bin:$HOME/bin

export PATH

|

| Attachment 2: sample_bashrc.sh

|

# .bashrc

source bashrc_anita.sh

# we also want to set two more environment variables that ANITA needs

# you should update ICEMC_SRC_DIR and ICEMC_BUILD_DIR to wherever you

# downloaded icemc to

export ICEMC_SRC_DIR=/path/to/icemc #change this line!

export ICEMC_BUILD_DIR=/path/to/icemc #change this line!

export DYLD_LIBRARY_PATH=${ICEMC_SRC_DIR}:${ICEMC_BUILD_DIR}:${DYLD_LIBRARY_PATH}

|

| Attachment 3: test_plot.cc

|

//C++ includes

#include <iostream>

//ROOT includes

#include "TCanvas.h"

#include "TStyle.h"

#include "TH1D.h"

#include "TFile.h"

#include "TTree.h"

using namespace std;

int main(int argc, char *argv[])

{

if(argc<2)

{

cout << "Not enough arguments! Stop run. " << endl;

return -1;

}

/*

we're going to make a histogram, and set some parameters about its X and Y axes

*/

TH1D *nuflavorint_hist = new TH1D("nuflavorint", "",3,1,4);

nuflavorint_hist->SetTitle("Neutrino Flavors");

nuflavorint_hist->GetXaxis()->SetTitle("Neutrino Flavors (1=e, 2=muon, 3=tau)");

nuflavorint_hist->GetYaxis()->SetTitle("Weighted Fraction of Total Detected Events");

nuflavorint_hist->GetXaxis()->SetTitleOffset(1.2);

nuflavorint_hist->GetYaxis()->SetTitleOffset(1.2);

nuflavorint_hist->GetXaxis()->CenterTitle();

nuflavorint_hist->GetYaxis()->CenterTitle();

for(int i=1; i < argc; i++)

{ // loop over the input files

//now we are going to load the icefinal.root file and draw in the "passing_events" tree, which stores info

string readfile = string(argv[i]);

TFile *AnitaFile = new TFile(( readfile ).c_str());

cout << "AnitaFile" << endl;

TTree *passing_events = (TTree*)AnitaFile->Get("passing_events");

cout << "Reading AnitaFile..." << endl;

//declare three variables we are going to use later

int num_pass; // number of entries (ultra-neutrinos);

double weight; // weight of neutrino counts;

int nuflavorint; // neutrino flavors;

num_pass = passing_events->GetEntries();

cout << "num_pass is " << num_pass << endl;

/*PRIMARIES VARIABLES*/

//set the "branch" of the tree which stores specific pieces of information

passing_events->SetBranchAddress("weight", &weight);

passing_events->SetBranchAddress("nuflavor", &nuflavorint);

//loop over all the events in the tree

for (int k=0; k < num_pass; k++) //entries are numbered 0 to num_pass-1; using <= would fill the last event twice

{

passing_events->GetEvent(k);

nuflavorint_hist->Fill(nuflavorint, weight); //fill the histogram with this value and this weight

} // CLOSE FOR LOOP OVER NUMBER OF EVENTS

} // CLOSE FOR LOOP OVER NUMBER OF INPUT FILES

//set up some parameters to make things look pretty

gStyle->SetHistFillColor(0);

gStyle->SetHistFillStyle(1);

gStyle->SetHistLineColor(1);

gStyle->SetHistLineStyle(0);

gStyle->SetHistLineWidth(2.5); //Setup plot Style

//make a "canvas" to draw on

TCanvas *c4 = new TCanvas("c4", "nuflavorint", 1100,850);

gStyle->SetOptTitle(1);

gStyle->SetStatX(0.33);

gStyle->SetStatY(0.87);

nuflavorint_hist->Draw("HIST"); //draw on it

//Save Plots

//make the line thicker and then save the result

gStyle->SetHistLineWidth(9);

c4->SaveAs("nuflavorint.png");

gStyle->SetHistLineWidth(2);

c4->SaveAs("nuflavorint.pdf");

delete c4; //clean up

return 0; //return successfully

}

|

| Attachment 4: bashrc_anita.sh

|

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

module load cmake/3.11.4

module load gnu/7.3.0

export CC=`which gcc`

export CXX=`which g++`

export BOOST_ROOT=/fs/project/PAS0654/shared_software/anita/owens_pitzer/build/boost_build

export LD_LIBRARY_PATH=${BOOST_ROOT}/stage/lib:$LD_LIBRARY_PATH

export BOOST_LIB=$BOOST_ROOT/stage/lib

export LD_LIBRARY_PATH=$BOOST_LIB:$LD_LIBRARY_PATH

export ROOTSYS=/fs/project/PAS0654/shared_software/anita/owens_pitzer/build/root

eval 'source /fs/project/PAS0654/shared_software/anita/owens_pitzer/build/root/bin/thisroot.sh'

|

| Attachment 5: getting_running_with_anita_stuff.pdf

|

| Attachment 6: getting_running_with_anita_stuff.pptx

|

| Attachment 7: test_plot.mk

|

# Makefile for the ROOT test programs.

# This Makefile shows nicely how to compile and link applications

# using the ROOT libraries on all supported platforms.

#

# Copyright (c) 2000 Rene Brun and Fons Rademakers

#

# Author: Fons Rademakers, 29/2/2000

include Makefile.arch

################################################################################

# Site specific flags

################################################################################

# Toggle these as needed to get things to install

#BOOSTFLAGS = -I boost_1_48_0

# commented out for kingbee and older versions of gcc

ANITA3_EVENTREADER=1

# Uncomment to enable healpix

#USE_HEALPIX=1

# Uncomment to enable explicit vectorization (but will do nothing if ANITA_UTIL is not available)

#VECTORIZE=1

# The ROOT flags are added to the CXXFLAGS in the .arch file

# so this should be simpler...

ifeq (,$(findstring -std=, $(CXXFLAGS)))

ifeq ($(shell test $(GCC_MAJOR) -lt 5; echo $$?),0)

ifeq ($(shell test $(GCC_MINOR) -lt 5; echo $$?),0)

CXXFLAGS += -std=c++0x

else

CXXFLAGS += -std=c++11

endif

endif

endif

################################################################################

# If not compiling with C++11 (or later) support, all occurrences of "constexpr"

# must be replaced with "const", because "constexpr" is a keyword

# which pre-C++11 compilers do not support.

# ("constexpr" is needed in the code to perform in-class initialization

# of static non-integral member objects, i.e.:

# static const double c_light = 2.99e8;

# which works in C++03 compilers, must be modified to:

# static constexpr double c_light = 2.99e8;

# to work in C++11, but adding "constexpr" breaks C++03 compatibility.

# The following compiler flag defines a preprocessor macro which is

# simply:

# #define constexpr const

# which replaces all instances of the text "constexpr" and replaces it

# with "const".

# This preserves functionality while only affecting very specific semantics.

ifeq (,$(findstring -std=c++1, $(CXXFLAGS)))

CPPSTD_FLAGS = -Dconstexpr=const

endif

# Uses the standard ANITA environment variable to figure

# out if ANITA libs are installed

ifdef ANITA_UTIL_INSTALL_DIR

ANITA_UTIL_EXISTS=1

ANITA_UTIL_LIB_DIR=${ANITA_UTIL_INSTALL_DIR}/lib

ANITA_UTIL_INC_DIR=${ANITA_UTIL_INSTALL_DIR}/include

LD_ANITA_UTIL=-L$(ANITA_UTIL_LIB_DIR)

LIBS_ANITA_UTIL=-lAnitaEvent -lRootFftwWrapper

INC_ANITA_UTIL=-I$(ANITA_UTIL_INC_DIR)

ANITA_UTIL_ETC_DIR=$(ANITA_UTIL_INSTALL_DIR)/etc

endif

ifdef ANITA_UTIL_EXISTS

CXXFLAGS += -DANITA_UTIL_EXISTS

endif

ifdef VECTORIZE

CXXFLAGS += -DVECTORIZE -march=native -fabi-version=0

endif

ifdef ANITA3_EVENTREADER

CXXFLAGS += -DANITA3_EVENTREADER

endif

ifdef USE_HEALPIX

CXXFLAGS += -DUSE_HEALPIX `pkg-config --cflags healpix_cxx`

LIBS += `pkg-config --libs healpix_cxx`

endif

################################################################################

GENERAL_FLAGS = -g -O2 -pipe -m64 -pthread

WARN_FLAGS = -W -Wall -Wextra -Woverloaded-virtual

# -Wno-unused-variable -Wno-unused-parameter -Wno-unused-but-set-variable

CXXFLAGS += $(GENERAL_FLAGS) $(CPPSTD_FLAGS) $(WARN_FLAGS) $(ROOTCFLAGS) $(INC_ANITA_UTIL)

DBGFLAGS = -pipe -Wall -W -Woverloaded-virtual -g -ggdb -O0 -fno-inline

DBGCXXFLAGS = $(DBGFLAGS) $(ROOTCFLAGS) $(BOOSTFLAGS)

LDFLAGS += $(CPPSTD_FLAGS) $(LD_ANITA_UTIL) -I$(BOOST_ROOT) -L.

LIBS += $(LIBS_ANITA_UTIL)

# Mathmore not included in the standard ROOT libs

LIBS += -lMathMore

DICT = classdict

OBJS = vector.o position.o earthmodel.o balloon.o icemodel.o signal.o ray.o Spectra.o anita.o roughness.o secondaries.o Primaries.o Tools.o counting.o $(DICT).o Settings.o Taumodel.o screen.o GlobalTrigger.o ChanTrigger.o SimulatedSignal.o EnvironmentVariable.o source.o random.o

BINARIES = test_plot$(ExeSuf)

################################################################################

.SUFFIXES: .$(SrcSuf) .$(ObjSuf) .$(DllSuf)

all: $(BINARIES)

$(BINARIES): %: %.$(SrcSuf) $(OBJS)

$(LD) $(CXXFLAGS) $(LDFLAGS) $(OBJS) $< $(LIBS) $(OutPutOpt) $@

@echo "$@ done"

.PHONY: clean

clean:

@rm -f $(BINARIES)

%.$(ObjSuf) : %.$(SrcSuf) %.h

@echo "<**Compiling**> "$<

$(LD) $(CXXFLAGS) -c $< -o $@

|

|

|

34

|

Tue Feb 26 16:19:20 2019 |

Julie Rolla | All of the group GitHub account links | Software | ANITA Binned Analysis: https://github.com/osu-particle-astrophysics/BinnedAnalysis

GENETIS Bicone: https://github.com/mclowdus/BiconeEvolution

GENETIS Dipole: https://github.com/hchasan/XF-Scripts

ANITA Build tool: https://github.com/anitaNeutrino/anitaBuildTool

ANITA Hackathon: https://github.com/anitaNeutrino/hackathon2017

ICEMC: https://github.com/anitaNeutrino/icemc

Brian's Github: https://github.com/clark2668?tab=repositories

Note that you *may* need permissions for some of these. Please email Lauren (ennesser.1@buckeyemail.osu.edu), Julie (JulieRolla@gmail.com), AND Brian (clark.2668@buckeyemail.osu.edu) if you have any issues with permissions. Please state which GitHub links you are looking to view. |

|

|

37

|

Tue May 14 10:38:08 2019 |

Amy | Getting started with AraSim | Software | Attached is a set of slides on Getting Started with QC, a simulation monitoring project. It has instructions on getting started with a terminal window, and on downloading, compiling and running AraSim, the simulation program for the ARA project. AraSim has moved from the SVN repository to GitHub, so you should now be able to retrieve, compile, and run it using:

git clone https://github.com/ara-software/AraSim.git

cd AraSim

make

./AraSim

It will run without arguments, but the output might be silly. You can follow the instructions for running AraSim that are in the qc_Intro instructions, which will give sensible results. Those parts are still correct.

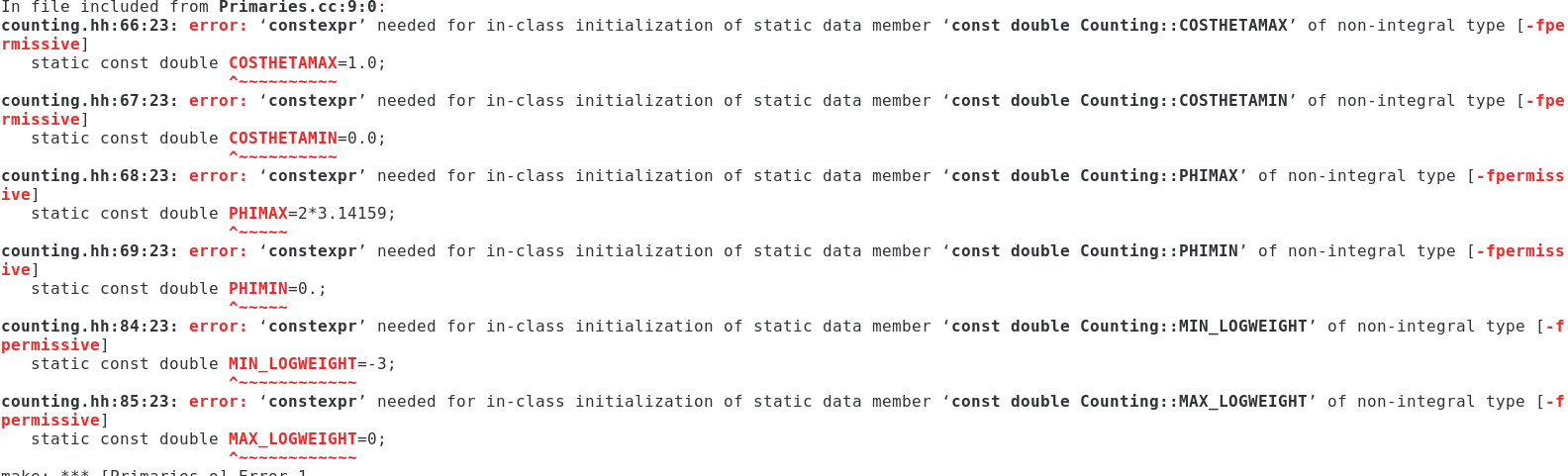

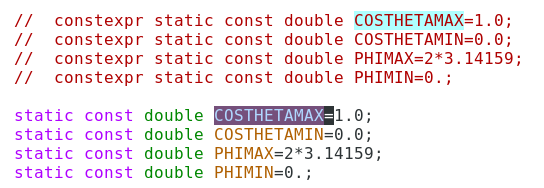

You might get some constexpr errors if you are using ROOT 6, such as the ones in the first screen grab below. As the error messages indicate, the flagged declarations need to be changed so that they no longer use constexpr. A few examples are shown in the next screen grab.
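Before editing anything, it can help to see how many declarations are involved. The sketch below lists every whole-word use of a keyword (constexpr by default) in the C++ sources under a directory. The function name list_keyword_uses is hypothetical, and the choice of file extensions is an assumption based on the .h, .hh and .cc files that AraSim uses.

```shell
#!/bin/bash
# sketch only: list_keyword_uses is a hypothetical helper name

# print "file:line:text" for every whole-word use of a keyword in the
# .h, .hh and .cc files under a directory
# usage: list_keyword_uses <source dir> [keyword]   (keyword defaults to constexpr)
list_keyword_uses() {
    grep -rnw --include='*.h' --include='*.hh' --include='*.cc' \
        -e "${2:-constexpr}" "$1"
}

# example, run from the directory that contains your AraSim checkout:
#   list_keyword_uses AraSim
```

The function returns a non-zero status when nothing matches, which makes it usable in an if statement as well.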

If you are here, you likely would also want to know how to install the prerequisites themselves. You might find this entry helpful then: http://radiorm.physics.ohio-state.edu/elog/How-To/4. It is only technically applicable to an older version that is designed for compatibility with ROOT5, but it will give you the idea.

These instructions are also superseded by an updated presentation at http://radiorm.physics.ohio-state.edu/elog/How-To/38

|

| Attachment 1: intro_to_qc.pdf

|

| Attachment 2: Screenshot_from_2019-05-14_10-54-48.png

|  |

| Attachment 3: Screenshot_from_2019-05-14_10-56-19.png

|  |

|

|

38

|

Wed May 15 00:38:54 2019 |

Brian Clark | Get a quick start with AraSim on osc | Software | Follow the instructions in the attached "getting_running_with_ara_stuff.pdf" file to download AraSim, compile it, generate results, and plot those results. |

| Attachment 1: plotting_example.cc

|

#include <iostream>

#include <fstream>

#include <sstream>

#include <math.h>

#include <string>

#include <stdio.h>

#include <stdlib.h>

#include <vector>

#include <time.h>

#include <cstdio>

#include <cstdlib>

#include <unistd.h>

#include <cstring>

#include <unistd.h>

#include "TTreeIndex.h"

#include "TChain.h"

#include "TH1.h"

#include "TF1.h"

#include "TF2.h"

#include "TFile.h"

#include "TRandom.h"

#include "TRandom2.h"

#include "TRandom3.h"

#include "TTree.h"

#include "TLegend.h"

#include "TLine.h"

#include "TROOT.h"

#include "TPostScript.h"

#include "TCanvas.h"

#include "TH2F.h"

#include "TText.h"

#include "TProfile.h"

#include "TGraphErrors.h"

#include "TStyle.h"

#include "TMath.h"

#include "TVector3.h"

#include "TRotation.h"

#include "TSpline.h"

#include "Math/InterpolationTypes.h"

#include "Math/Interpolator.h"

#include "Math/Integrator.h"

#include "TGaxis.h"

#include "TPaveStats.h"

#include "TLatex.h"

#include "Constants.h"

#include "Settings.h"

#include "Position.h"

#include "EarthModel.h"

#include "Tools.h"

#include "Vector.h"

#include "IceModel.h"

#include "Trigger.h"

#include "Spectra.h"

#include "signal.hh"

#include "secondaries.hh"

#include "Ray.h"

#include "counting.hh"

#include "Primaries.h"

#include "Efficiencies.h"

#include "Event.h"

#include "Detector.h"

#include "Report.h"

using namespace std;

/////////////////Plotting Script for nuflavorint for AraSIMQC

/////////////////Created by Kaeli Hughes, modified by Brian Clark

/////////////////Prepared on April 28 2016 as an "introductory script" to plotting with AraSim; for new users of queenbee

class EarthModel;

int main(int argc, char **argv)

{

gStyle->SetOptStat(111111); //this is a selection of statistics settings; you should do some googling and figure out exactly what this particular combination does

gStyle->SetOptDate(1); //this tells root to put a date and timestamp on whatever plot we output

if(argc<2){ //if you don't have at least 1 file to run over, then you haven't given it a file to analyze; this checks this

cout<<"Not enough arguments! Abort run."<<endl;

return -1; //actually stop here; without an input file there is nothing to plot

}

//Create the histogram

TCanvas *c2 = new TCanvas("c2", "nuflavorint", 1100,850); //make a canvas on which to plot the data

TH1F *nuflavorint_hist = new TH1F("nuflavorint_hist", "nuflavorint histogram", 3, 0.5, 3.5); //create a histogram with three bins

nuflavorint_hist->GetXaxis()->SetNdivisions(3); //set the number of divisions

int total_thrown=0; // a variable to hold the grand total number of events thrown for all input files

for(int i=1; i<argc;i++){ //loop over the input files

string readfile; //create a variable called readfile that will hold the title of the simulation file

readfile = string(argv[i]); //set the readfile variable equal to the filename

Event *event = 0; //create an Event class pointer called event; note that it is set equal to zero to avoid creating an uninitialized pointer

Report *report=0; //create a Report class pointer called report; also set to zero for the same reason

Settings *settings = 0; //create a Settings class pointer called settings; also set to zero for the same reason

TFile *AraFile=new TFile(( readfile ).c_str()); //make a new file called "AraFile" that will be simulation file we are reading in

if(!(AraFile->IsOpen())) return 0; //checking to see if the file we're trying to read in opened correctly; if not, bounce out of the program

int num_pass;//number of passing events

TTree *AraTree=(TTree*)AraFile->Get("AraTree"); //get the AraTree

TTree *AraTree2=(TTree*)AraFile->Get("AraTree2"); //get the AraTree2

AraTree2->SetBranchAddress("event",&event); //get the event branch

AraTree2->SetBranchAddress("report",&report); //get the report branch

AraTree->SetBranchAddress("settings",&settings); //get the settings branch

num_pass=AraTree2->GetEntries(); //get the number of passed events in the data file

AraTree->GetEvent(0); //get the first entry; sometimes the tree does not instantiate properly unless you explicitly "activate" the first entry; this also loads the settings object used on the next line

total_thrown+=(settings->NNU); //add the number of events from this file (The NNU variable) to the grand total; NNU is the number of THROWN neutrinos

for (int k=0; k<num_pass; k++){ //going to fill the histograms for as many events as were in this input file

AraTree2->GetEvent(k); //get the event from the tree

int nuflavorint; //make the container variable

double weight; //the weight of the event

int trigger; //the global trigger value for the event

nuflavorint=event->nuflavorint; //draw the event out; one of the objects in the event class is the nuflavorint, and this is the syntax for accessing it

weight=event->Nu_Interaction[0].weight; //draw out the weight of the event

trigger=report->stations[0].Global_Pass; //find out if the event was a triggered event or not

/*

if(trigger!=0){ //check if the event triggered

nuflavorint_hist->Fill(nuflavorint,weight); //fill the event into the histogram; the first argument of the fill (which is mandatory) is the value you're putting into the histogram; the second value is optional, and is the weight of the event in the histogram

//in this particular version of the code then, we are only plotting the TRIGGERED events; if you wanted to plot all of the events, you could instead remove this "if" condition and just Fill everything

}

*/

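nuflavorint_hist->Fill(nuflavorint,weight); //fill the event into the histogram; the first argument (mandatory) is the value, the second (optional) is the weight; because the "if" block above is commented out, ALL events are filled here, not just the triggered ones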

}

}

//After looping over all the files, make the plots and save them

//do some stuff to get the "total events thrown" text box ready; this is useful because on the plot itself you can then see how many events you THREW into the simulation; this is especially useful if you're only plotting passed events, but want to know what fraction of your total thrown that is

char *buffer= (char*) malloc (250); //declare a buffer pointer, and allocate it some chunk of memory

int a = snprintf(buffer, 250,"Total Events Thrown: %d",total_thrown); //print the words "Total Events Thrown: %d" to the variable "buffer" and tell the system how long that phrase is; the %d sign tells C++ to replace that "%d" with the next argument, or in this case, the number "total_thrown"

if(a>=250){ //if the phrase is longer than the pre-allocated space, increase the size of the buffer until it's big enough

buffer=(char*) realloc(buffer, a+1);

snprintf(buffer, a+1,"Total Events Thrown: %d",total_thrown); //write the phrase again, this time using the full size of the enlarged buffer

}

TLatex *u = new TLatex(.3,.01,buffer); //create a latex text object which we can draw on the canvas

u->SetNDC(kTRUE); //changes the coordinate system for the tex object plotting

u->SetIndiceSize(.1); //set the size of the latex index

u->SetTextSize(.025); //set the size of the latex text

nuflavorint_hist->Draw(); //draw the histogram

nuflavorint_hist->GetXaxis()->SetTitle("Neutrino Flavor"); //set the x-axis label

nuflavorint_hist->GetYaxis()->SetTitle("Number of Events (weighted)"); //set the y-axis label

nuflavorint_hist->GetYaxis()->SetTitleOffset(1.5); //set the separation between the y-axis and its label; root natively makes this smaller than is ideal

nuflavorint_hist->SetLineColor(kBlack); //set the color of the histogram to black, instead of root's default navy blue

u->Draw(); //draw the statistics box information

c2->SaveAs("outputs/plotting_example.png"); //save the canvas as a PNG file for viewing

c2->SaveAs("outputs/plotting_example.pdf"); //save the canvas as a PDF file for viewing

c2->SaveAs("outputs/plotting_example.root"); //save the canvas as a ROOT file for viewing or editing later

} //end main; this is the end of the script

|

| Attachment 2: plotting_example.mk

|

#############################################################################

## Makefile -- New Version of my Makefile that works on both linux

## and mac os x

## Ryan Nichol <rjn@hep.ucl.ac.uk>

##############################################################################

##############################################################################

##############################################################################

##

##This file was copied from M.readGeom and altered for my use 14 May 2014

##Khalida Hendricks.

##

##Modified by Brian Clark for use on plotting_example on 28 April 2016

##

##Changes:

##line 54 - OBJS = .... add filename.o .... del oldfilename.o

##line 55 - CCFILE = .... add filename.cc .... del oldfilename.cc

##line 58 - PROGRAMS = filename

##line 62 - filename : $(OBJS)

##

##

##############################################################################

##############################################################################

##############################################################################

include StandardDefinitions.mk

#Site Specific Flags

ifeq ($(strip $(BOOST_ROOT)),)

BOOST_ROOT = /usr/local/include

endif

SYSINCLUDES = -I/usr/include -I$(BOOST_ROOT)

SYSLIBS = -L/usr/lib

DLLSUF = ${DllSuf}

OBJSUF = ${ObjSuf}

SRCSUF = ${SrcSuf}

CXX = g++

#Generic and Site Specific Flags

CXXFLAGS += $(INC_ARA_UTIL) $(SYSINCLUDES)

LDFLAGS += -g $(LD_ARA_UTIL) -I$(BOOST_ROOT) $(ROOTLDFLAGS) -L.

# copy from ray_solver_makefile (removed -lAra part)

# added for Fortran to C++

LIBS = $(ROOTLIBS) -lMinuit $(SYSLIBS)

GLIBS = $(ROOTGLIBS) $(SYSLIBS)

LIB_DIR = ./lib

INC_DIR = ./include

#ROOT_LIBRARY = libAra.${DLLSUF}

OBJS = Vector.o EarthModel.o IceModel.o Trigger.o Ray.o Tools.o Efficiencies.o Event.o Detector.o Position.o Spectra.o RayTrace.o RayTrace_IceModels.o signal.o secondaries.o Settings.o Primaries.o counting.o RaySolver.o Report.o eventSimDict.o plotting_example.o

CCFILE = Vector.cc EarthModel.cc IceModel.cc Trigger.cc Ray.cc Tools.cc Efficiencies.cc Event.cc Detector.cc Spectra.cc Position.cc RayTrace.cc signal.cc secondaries.cc RayTrace_IceModels.cc Settings.cc Primaries.cc counting.cc RaySolver.cc Report.cc plotting_example.cc

CLASS_HEADERS = Trigger.h Detector.h Settings.h Spectra.h IceModel.h Primaries.h Report.h Event.h secondaries.hh #need to add headers which added to Tree Branch

PROGRAMS = plotting_example

all : $(PROGRAMS)

plotting_example : $(OBJS)

$(LD) $(OBJS) $(LDFLAGS) $(LIBS) -o $(PROGRAMS)

@echo "done."

#The library

$(ROOT_LIBRARY) : $(LIB_OBJS)

@echo "Linking $@ ..."

ifeq ($(PLATFORM),macosx)

# We need to make both the .dylib and the .so

$(LD) $(SOFLAGS)$@ $(LDFLAGS) $(G77LDFLAGS) $^ $(OutPutOpt) $@

ifneq ($(subst $(MACOSX_MINOR),,1234),1234)

ifeq ($(MACOSX_MINOR),4)

ln -sf $@ $(subst .$(DllSuf),.so,$@)

else

$(LD) -dynamiclib -undefined $(UNDEFOPT) $(LDFLAGS) $(G77LDFLAGS) $^ \

$(OutPutOpt) $(subst .$(DllSuf),.so,$@)

endif

endif

else

$(LD) $(SOFLAGS) $(LDFLAGS) $(G77LDFLAGS) $(LIBS) $(LIB_OBJS) -o $@

endif

##-bundle

#%.$(OBJSUF) : %.$(SRCSUF)

# @echo "<**Compiling**> "$<

# $(CXX) $(CXXFLAGS) -c $< -o $@

%.$(OBJSUF) : %.C

@echo "<**Compiling**> "$<

$(CXX) $(CXXFLAGS) $ -c $< -o $@

%.$(OBJSUF) : %.cc

@echo "<**Compiling**> "$<

$(CXX) $(CXXFLAGS) $ -c $< -o $@

# added for fortran code compiling

%.$(OBJSUF) : %.f

@echo "<**Compiling**> "$<

$(G77) -c $<

eventSimDict.C: $(CLASS_HEADERS)

@echo "Generating dictionary ..."

@ rm -f *Dict*

rootcint $@ -c ${INC_ARA_UTIL} $(CLASS_HEADERS) ${ARA_ROOT_HEADERS} LinkDef.h

clean:

@rm -f *Dict*

@rm -f *.${OBJSUF}

@rm -f $(LIBRARY)

@rm -f $(ROOT_LIBRARY)

@rm -f $(subst .$(DLLSUF),.so,$(ROOT_LIBRARY))

@rm -f $(TEST)

#############################################################################

|

| Attachment 3: test_setup.txt

|

NFOUR=1024

EXPONENT=21

NNU=300 // number of neutrino events

NNU_PASSED=10 // number of neutrino events that are allowed to pass the trigger

ONLY_PASSED_EVENTS=0 // 0 (default): AraSim throws NNU events whether or not they pass; 1: AraSim throws events until the number of events that pass the trigger is equal to NNU_PASSED (WARNING: may cause long run times if reasonable values are not chosen)

NOISE_WAVEFORM_GENERATE_MODE=0 // generate new noise waveforms for each events

NOISE_EVENTS=16 // number of pure noise waveforms

TRIG_ANALYSIS_MODE=0 // 0 = signal + noise, 1 = signal only, 2 = noise only

DETECTOR=1 // ARA stations 1 to 7

NOFZ=1

core_x=10000

core_y=10000

TIMESTEP=5.E-10 // value for 2GHz actual station value

TRIG_WINDOW=1.E-7 // 100ns which is actual testbed trig window

POWERTHRESHOLD=-6.06 // 100Hz global trig rate for 3 out of 16 ARA stations

POSNU_RADIUS=3000

V_MIMIC_MODE=0 // 0 : global trig is located center of readout windows

DATA_SAVE_MODE=0 // 2 : don't save any waveform informations at all

DATA_LIKE_OUTPUT=0 // 0 : don't save any waveform information to eventTree

BORE_HOLE_ANTENNA_LAYOUT=0

SECONDARIES=0

TRIG_ONLY_BH_ON=0

CALPULSER_ON=0

USE_MANUAL_GAINOFFSET=0

USE_TESTBED_RFCM_ON=0

NOISE_TEMP_MODE=0

TRIG_THRES_MODE=0

READGEOM=0 // reads geometry information from the sqlite file or not (0 : don't read)

TRIG_MODE=0 // use vpol, hpol separated trigger mode. by default N_TRIG_V=3, N_TRIG_H=3. You can change this values

number_of_stations=1

core_x=10000

core_y=10000

DETECTOR=1

DETECTOR_STATION=2

DATA_LIKE_OUTPUT=0

NOISE_WAVEFORM_GENERATE_MODE=0 // generates new waveforms for every event

NOISE=0 //flat thermal noise

NOISE_CHANNEL_MODE=0 //using different noise temperature for each channel

NOISE_EVENTS=16 // number of noise events which will be store in the trigger class for later use

ANTENNA_MODE=1

APPLY_NOISE_FIGURE=0

|

| Attachment 4: bashrc_anita.sh

|

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

module load cmake/3.11.4

module load gnu/7.3.0

export CC=`which gcc`

export CXX=`which g++`

export BOOST_ROOT=/fs/project/PAS0654/shared_software/anita/owens_pitzer/build/boost_build

export LD_LIBRARY_PATH=${BOOST_ROOT}/stage/lib:$LD_LIBRARY_PATH

export BOOST_LIB=$BOOST_ROOT/stage/lib

export LD_LIBRARY_PATH=$BOOST_LIB:$LD_LIBRARY_PATH

export ROOTSYS=/fs/project/PAS0654/shared_software/anita/owens_pitzer/build/root

eval 'source /fs/project/PAS0654/shared_software/anita/owens_pitzer/build/root/bin/thisroot.sh'

|

| Attachment 5: sample_bash_profile.sh

|

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/.local/bin:$HOME/bin

export PATH

|

| Attachment 6: sample_bashrc.sh

|

# .bashrc

source bashrc_anita.sh

# we also want to set two more environment variables that ANITA needs

# you should update ICEMC_SRC_DIR and ICEMC_BUILD_DIR to wherever you

# downloaded icemc to

export ICEMC_SRC_DIR=/path/to/icemc #change this line!

export ICEMC_BUILD_DIR=/path/to/icemc #change this line!

export DYLD_LIBRARY_PATH=${ICEMC_SRC_DIR}:${ICEMC_BUILD_DIR}:${DYLD_LIBRARY_PATH}

|

| Attachment 7: getting_running_with_ara_stuff.pdf

|

| Attachment 8: getting_running_with_ara_stuff.pptx

|

|

|

39

|

Thu Jul 11 10:05:37 2019 |

Justin Flaherty | Installing PyROOT for Python 3 on Owens | Software | In order to get PyROOT working for Python 3, you must build ROOT with a flag that specifies Python 3 in the installation. This method will create a folder titled root-6.16.00 in your current directory, so organize things how you see fit. Then the steps are relatively simple:

wget https://root.cern/download/root_v6.16.00.source.tar.gz

tar -zxf root_v6.16.00.source.tar.gz

cd root-6.16.00

mkdir obj

cd obj

cmake .. -Dminuit2=On -Dpython3=On

make -j8
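Once the build finishes, a quick sanity check is whether Python 3 can actually import the ROOT module. The sketch below assumes the ROOT environment has already been set up in the current shell, for example by sourcing bin/thisroot.sh from the build directory (ROOT builds normally generate that script, but check that it exists in your obj folder). The function name check_pyroot is hypothetical.

```shell
#!/bin/bash
# sketch only: check_pyroot is a hypothetical helper name

# report whether a given Python interpreter can import the ROOT module
# usage: check_pyroot [python executable]   (defaults to python3)
check_pyroot() {
    python_bin=${1:-python3}
    if "$python_bin" -c 'import ROOT' >/dev/null 2>&1; then
        echo "PyROOT import OK with $python_bin"
    else
        echo "PyROOT import FAILED with $python_bin" >&2
        return 1
    fi
}

# example, after setting up the ROOT environment:
#   check_pyroot python3
```

If the import fails even though the build succeeded, the usual suspects are a missing environment setup or a Python version different from the one ROOT was built against.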

If you wish to do a different version of ROOT, the steps should be the same:

wget https://root.cern/download/root_v<version>.source.tar.gz

tar -zxf root_v<version>.source.tar.gz

cd root-<version>

mkdir obj

cd obj

cmake .. -Dminuit2=On -Dpython3=On

make -j8 |

|

|

Draft

|

Fri Jan 31 10:43:52 2020 |

Jorge Torres | Mounting ARA software on OSC through CernVM File System | Software | OSC has added ARA's CVMFS repository on Pitzer and Owens. This had already been done on UW's cluster thanks to Ben Hokanson-Fasig and Brian Clark. With CVMFS, all the dependencies are compiled and stored in a single folder (container), meaning that the user can just source a script that sets the required environment variables and not worry about installing the dependencies at all. This is very useful, since it usually takes a considerable amount of time to get those dependencies and properly install/debug the ARA software. To use the software, all you have to do is:

source /cvmfs/ara.opensciencegrid.org/trunk/centos7/setup.sh

To verify that the container was correctly loaded, type

and see if the root display pops up. You can also go to /cvmfs/ara.opensciencegrid.org/trunk/centos7/source/AraSim and execute ./AraSim

Because it is a container, the permissions are read-only. This means that if you want to modify the existing code, you'll have to copy the piece of code that you want and change the environment variables of that package, in this case $ARA_UTIL_INSTALL_DIR, which is the destination where you want your executables, libraries and such installed.
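The copy step can be sketched as below. This is only an illustration: copy_for_editing is a hypothetical helper, and the destination paths in the usage comment are examples rather than recommended locations. The chmod is there because files copied out of a read-only tree may keep their read-only permission bits.

```shell
#!/bin/bash
# sketch only: copy_for_editing is a hypothetical helper name

# copy a read-only source tree to a new writable location and make the copy editable
# usage: copy_for_editing <source dir> <destination dir>
# note: the destination should not exist yet; if it does, cp -r nests the copy inside it
copy_for_editing() {
    cp -r "$1" "$2" || return 1
    chmod -R u+w "$2"
}

# example (destination paths are placeholders):
#   copy_for_editing /cvmfs/ara.opensciencegrid.org/trunk/centos7/source/AraSim "$HOME/AraSim"
#   export ARA_UTIL_INSTALL_DIR="$HOME/ara_install"
```

Source the setup.sh script first and override $ARA_UTIL_INSTALL_DIR afterwards, so that your value is not overwritten.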

Libraries and executables are stored here, in case you want to reference those dependencies as your environmental variables: /cvmfs/ara.opensciencegrid.org/trunk/centos7/

Even if you're not in the ARA collaboration, you can benefit from this through the fact that ROOT6 is installed and compiled in the container. In order to use it you just need to run the same bash command, and ROOT 6 will be available for you to use.

Feel free to email any questions to Brian Clark or myself.

--------

Technical notes:

The ARA software was compiled with gcc version 4.8.5. On OSC, that compiler can be loaded by doing module load gnu/4.8.5. If you're using any other compiler, you'll get a warning telling you that if you do any compilation against the ARA software, you may need to add the -D_GLIBCXX_USE_CXX11_ABI=0 flag to your Makefile. |

|

|

45

|

Fri Feb 4 13:06:25 2022 |

William Luszczak | "Help! AnitaBuildTools/PueoBuilder can't seem to find FFTW!" | Software | Disclaimer: This might not be the best solution to this problem. I arrived here after a lot of googling and stumbling across this thread with a similar problem for an unrelated project: https://github.com/xtensor-stack/xtensor-fftw/issues/52. If you're someone who actually knows cmake, maybe you have a better solution.

When compiling both pueoBuilder and anitaBuildTools, I have run into a cmake error that looks like:

CMake Error at /apps/cmake/3.17.2/share/cmake-3.17/Modules/FindPackageHandleStandardArgs.cmake:164 (message):

Could NOT find FFTW (missing: FFTW_LIBRARIES)

(potentially also missing FFTW_INCLUDES). Directing CMake to the pre-existing FFTW installations on OSC does not seem to do anything to resolve this error. From what I can tell, this might be related to how FFTW is built, so to get around this we need to build our own installation of FFTW using cmake instead of the recommended build process. To do this, grab whatever version of FFTW you need from here: http://www.fftw.org/download.html (for example, I needed 3.3.9). Untar the source file into whatever directory you're working in:

tar -xzvf fftw-3.3.9.tar.gz

Then make a build directory and cd into it:

mkdir install

cd install

Now build using cmake, using the flags shown below.

cmake -DCMAKE_INSTALL_PREFIX=$(path_to_install_loc) -DBUILD_SHARED_LIBS=ON -DENABLE_OPENMP=ON -DENABLE_THREADS=ON ../fftw-3.3.9

Here `$(path_to_install_loc)` is a placeholder: replace it with the actual path of your install location. For example, I downloaded and untarred the source file in `/scratch/wluszczak/fftw/`, and my install prefix was `/scratch/wluszczak/fftw/install/`. In principle this installation prefix can be anywhere you have write access, but for the sake of organization I usually try to keep everything in one place.

Once you have configured cmake, go ahead and install:

make install -j $(nproc)

Here $(nproc) expands to the number of processing units available on the machine, so make runs one job per core. To use fewer threads, replace it with a number: on OSC I used 4 for compiling the ANITA tools and it finished in a reasonable amount of time.
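On a shared login node you may not want to use every core. The following is a minimal sketch of capping the job count; the variable names `max_jobs` and `jobs_to_use` are made up for illustration, and the cap of 4 is just the value mentioned above.

```shell
#!/bin/bash
# Sketch: pick a parallel job count for make, capped at a chosen maximum.
max_jobs=4                      # assumed cap; adjust to taste
avail=$(nproc)                  # number of processing units available
if [ "$avail" -lt "$max_jobs" ]; then
    jobs_to_use=$avail
else
    jobs_to_use=$max_jobs
fi
# Print the command rather than running it, so this is safe to try anywhere.
echo "Would run: make install -j $jobs_to_use"
```

Once you are happy with the number, replace the `echo` line with the actual `make install -j $jobs_to_use` call.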

Once this has finished, cd to your install directory and remove everything except the `include` and `lib64` folders:

cd $(path_to_install_dir) #You might already be here if you never left

rm *

rm -r CMakeFiles

Now we need to rebuild with slightly different flags:

cmake -DCMAKE_INSTALL_PREFIX=$(path_to_install_loc) -DBUILD_SHARED_LIBS=ON -DENABLE_OPENMP=ON -DENABLE_THREADS=ON -DENABLE_FLOAT=ON ../fftw-3.3.9

make install -j $(nproc)
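The two configure passes differ only in `-DENABLE_FLOAT=ON`, which as far as I know selects the single-precision build (the `libfftw3f` libraries), while the first pass produces the double-precision `libfftw3` libraries. The sketch below only prints the two configure commands so the difference is easy to see. `FFTW_SRC`, `FFTW_PREFIX` and `fftw_configure_cmd` are hypothetical names, not part of FFTW or the build tools.

```shell
#!/bin/bash
# Sketch: print the cmake configure command for each FFTW precision.
# FFTW_SRC and FFTW_PREFIX are placeholder names; set them to your own paths.
FFTW_SRC=${FFTW_SRC:-../fftw-3.3.9}
FFTW_PREFIX=${FFTW_PREFIX:-$PWD}

fftw_configure_cmd() {
    # $1 is "double" (first pass) or "float" (second pass)
    extra=""
    if [ "$1" = "float" ]; then
        extra=" -DENABLE_FLOAT=ON"
    fi
    echo "cmake -DCMAKE_INSTALL_PREFIX=$FFTW_PREFIX -DBUILD_SHARED_LIBS=ON -DENABLE_OPENMP=ON -DENABLE_THREADS=ON$extra $FFTW_SRC"
}

fftw_configure_cmd double   # first pass
fftw_configure_cmd float    # second pass, adds -DENABLE_FLOAT=ON
```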

At the end of the day, your fftw install directory should have the following files:

include/fftw3.f

include/fftw3.f03

include/fftw3.h

include/fftw3l.f03

include/fftw3q.f03

lib64/libfftw3f.so

lib64/libfftw3f_threads.so.3

lib64/libfftw3_omp.so.3.6.9

lib64/libfftw3_threads.so

lib64/libfftw3f_omp.so

lib64/libfftw3f.so.3

lib64/libfftw3f_threads.so.3.6.9

lib64/libfftw3.so

lib64/libfftw3_threads.so.3

lib64/libfftw3f_omp.so.3

lib64/libfftw3f.so.3.6.9

lib64/libfftw3_omp.so

lib64/libfftw3.so.3

lib64/libfftw3_threads.so.3.6.9

lib64/libfftw3f_omp.so.3.6.9

lib64/libfftw3f_threads.so

lib64/libfftw3_omp.so.3

lib64/libfftw3.so.3.6.9
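Rather than comparing the listing by eye, you can check for the unversioned files with a short function. This is a sketch under the assumption that the libraries landed in `lib64` as in the listing above; the function name `check_fftw_install` is made up for illustration.

```shell
#!/bin/bash
# Sketch: report which expected FFTW files are missing under an install prefix.
check_fftw_install() {
    local prefix="$1"
    local missing=0
    local f
    for f in include/fftw3.h \
             lib64/libfftw3.so lib64/libfftw3f.so \
             lib64/libfftw3_threads.so lib64/libfftw3f_threads.so \
             lib64/libfftw3_omp.so lib64/libfftw3f_omp.so; do
        if [ ! -e "$prefix/$f" ]; then
            echo "missing: $prefix/$f"
            missing=1
        fi
    done
    # Return 0 if everything was found, 1 otherwise.
    return "$missing"
}
```

For example, `check_fftw_install /scratch/wluszczak/fftw/install && echo "FFTW install looks complete"`. If only the `libfftw3f*` files are reported missing, the second (float) build pass probably did not run or install.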

Once fftw has been installed, export your install directory (the one with the include and lib64 folders) to the following environment variable:

export FFTWDIR=$(path_to_install_loc)

Now you should be able to cd to your anitaBuildTools directory (or pueoBuilder directory) and run their associated build scripts:

./buildAnita.sh

or:

./pueoBuilder.sh

And hopefully your tools will magically compile (or at least, you'll get a new set of errors that are no longer related to this problem).

If you're running into an error that looks like:

CMake Error: The following variables are used in this project, but they are set to NOTFOUND.

Please set them or make sure they are set and tested correctly in the CMake files:

FFTWF_LIB (ADVANCED)

then pueoBuilder/anitaBuildTools can't find your FFTW installation (or files that are supposed to be included in that installation). Try rebuilding FFTW with different flags, according to which files it thinks are missing.

If pueoBuilder can't find your FFTW installation at all (i.e. you're getting an error that looks like missing: FFTW_LIBRARIES or missing: FFTW_INCLUDES), check the environment variable that is supposed to point to your local FFTW installation (`$FFTWDIR`) and make sure the correct files are in its `include` and `lib64` (or `lib`) subdirectories. |

|

|

47

|

Mon Apr 24 11:51:42 2023 |

William Luszczak | PUEO simulation stack installation instructions | Software | These are instructions I put together as I was first figuring out how to compile PueoSim/NiceMC. This was originally done on machines running CentOS 7, but has since been replicated on the OSC machines (running RedHat 7.9 I think?). I generally try to avoid any `module load` type prerequisites, instead opting to compile any dependencies from source. You _might_ be able to get this to work by `module load`ing e.g. fftw, but try this at your own peril.

# pueoBuilder Installation Tutorial

This tutorial will guide you through the process of building the tools included in pueoBuilder from scratch, including the prerequisites and any environment variables that you will need to set. This sort of thing is always a bit of a nightmare for me, so hopefully this guide can help you skip some of the frustration that I ran into. I did not have root access on the system I was building on, so the instructions below are what I had to do to get things working with local installations. If you have root access, then things might be a bit easier. For reference, I'm working on CentOS 7; other operating systems might run into different problems.

## Prerequisites

As far as I can tell, the prerequisites that need to be built first are:

-Python 3.9.18 (Apr. 6 2024 edit by Jason Yao, needed for ROOT 6.26-14)

-cmake 3.21.2 (I had problems with 3.11.4)

-gcc 11.1.0 (9.X will not work) (update 4/23/24: If you are trying to compile ROOT 6.30, you might need to downgrade to gcc 10.X, see note about TBB in "Issues I ran into" at the end)

-fftw 3.3.9

-gsl 2.7.1 (for ROOT)

-ROOT 6.24.00

-OneTBB 2021.12.0 (if trying to compile ROOT 6.30)

### CMake

You can download the source files for CMake here: https://cmake.org/download/. Untar the source files with:

tar -xzvf cmake-3.22.1.tar.gz

Compiling CMake is as easy as following the directions on the website: https://cmake.org/install/, but since we're doing a local build, we'll use the `configure` script instead of the listed `bootstrap` script. As an example, suppose that I downloaded the above tar file to `/scratch/wluszczak/cmake`:

mkdir install

cd cmake-3.22.1

./configure --prefix=/scratch/wluszczak/cmake/install

make

make install

You should additionally add this directory to your `$PATH` variable:

export PATH=/scratch/wluszczak/cmake/install/bin:$PATH

To check to make sure that you are using the correct version of CMake, run:

cmake --version

and you should get:

cmake version 3.22.1

CMake suite maintained and supported by Kitware (kitware.com/cmake).
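If you want a script to do this check for you, here is a minimal sketch that compares the version on your `$PATH` against a minimum. It assumes GNU `sort` (for the `-V` version sort) and treats the 3.21.2 from the prerequisites list as a minimum, which is my assumption; `version_at_least` is a made-up helper name.

```shell
#!/bin/bash
# Sketch: succeed if version $1 is at least version $2 (relies on GNU sort -V).
version_at_least() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

required="3.21.2"   # assumed minimum, taken from the prerequisites list
if command -v cmake >/dev/null 2>&1; then
    # First line of the output looks like "cmake version 3.22.1"
    found=$(cmake --version | head -n 1 | awk '{print $3}')
    if version_at_least "$found" "$required"; then
        echo "cmake $found is new enough"
    else
        echo "cmake $found is older than $required"
    fi
else
    echo "cmake not found on PATH"
fi
```

If this reports an older version than the one you just built, your `$PATH` export probably has not taken effect in the current shell.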

### gcc 11.1.0

Download the gcc source from GitHub here: https://github.com/gcc-mirror/gcc/tags. I used the 11.1.0 release, though there is a more recent 11.2.0 release that I have not tried. Once you have downloaded the source files, untar them:

tar -xzvf gcc-releases-gcc-11.1.0.tar.gz

Then install the prerequisites for gcc:

cd gcc-releases-gcc-11.1.0

contrib/download_prerequisites

One of the guides I looked at also recommended installing flex separately, but I didn't seem to need to do this, and I'm not sure how you would go about it without root privileges, though I imagine it's similar to the process for all the other packages here (download the source and then build by providing an installation prefix somewhere).

After you have installed the prerequisites, create a build directory:

cd ../

mkdir build

cd build

Then configure GCC for compilation like so:

../gcc-releases-gcc-11.1.0/configure -v --prefix=/home/wluszczak/gcc-11.1.0 --enable-checking=release --enable-languages=c,c++,fortran --disable-multilib --program-suffix=-11.1

I don't remember why I installed to my home directory instead of the /scratch/ directories used above. In principle the installation prefix can go wherever you have write access. Once things have configured, compile gcc with:

make -j $(nproc)

make install

Here `$(nproc)` expands to the number of processing units available on the machine; replace it with a smaller number to devote fewer threads to compilation. More threads will run faster, but be more taxing on your computer. For reference, I used 8 threads and it took ~15 min to finish.

Once gcc is built, we need to set a few environment variables:

export PATH=/home/wluszczak/gcc-11.1.0/bin:$PATH

export LD_LIBRARY_PATH=/home/wluszczak/gcc-11.1.0/lib64:$LD_LIBRARY_PATH

We also need to make sure cmake uses this compiler:

export CC=/home/wluszczak/gcc-11.1.0/bin/gcc-11.1

export CXX=/home/wluszczak/gcc-11.1.0/bin/g++-11.1

export FC=/home/wluszczak/gcc-11.1.0/bin/gfortran-11.1
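Before moving on, it can be worth confirming that these three variables actually point at executables, since a typo here only shows up much later as a confusing cmake error. A minimal sketch, where `check_compiler_vars` is a made-up helper name:

```shell
#!/bin/bash
# Sketch: confirm that CC, CXX and FC each name an executable file.
check_compiler_vars() {
    local status=0
    local name path
    for name in CC CXX FC; do
        # Look up the value of the variable whose name is stored in $name.
        eval "path=\${$name:-}"
        if [ -n "$path" ] && [ -x "$path" ]; then
            echo "$name ok: $path"
        else
            echo "$name is unset or not executable: '$path'"
            status=1
        fi
    done
    # Return 0 only if all three variables passed the check.
    return "$status"
}
```

Run `check_compiler_vars` after the exports; if any line reports a problem, compare the path against the `--prefix` and `--program-suffix` you gave to `configure`.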

If your installation prefix in the configure command above was different, substitute that directory in place of `/home/wluszczak/gcc-11.1.0` for all the above export commands. To easily set these variables whenever you want to use gcc-11.1.0, you can stick these commands into a single shell script:

#load_gcc11.1.sh

export PATH=/home/wluszczak/gcc-11.1.0/bin:$PATH